I’m working on a project to really get Rust under my fingers. It uses Diesel and Axum to provide database and web functionality. As a containerization fan, I wanted to get the code to a barely functional state and then get it running in containers. As with many things in programming, getting the containerization done took some research, trying, failing, crying, gnashing of teeth, and every other show of frustration I could find at hand.

To ease the pain for others, I’m including my setup here. I have not set up Diesel’s embed_migrations macro yet, but will write that up when it’s working. In this example, we’re mostly using Docker to do the heavy lifting.

The Setup

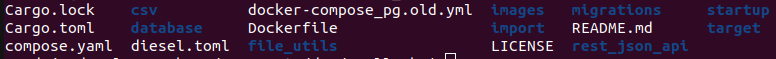

Overall, the setup looks like the following.

Here we see that we’ve added a single Dockerfile. This file is for the Rust server. We’ve added our compose.yaml, which contains the overall config for the Rust server, the database server, a Postgres admin server, and finally our network config. Outside of these files, there is no other setup.

The Dockerfile

Here is the code from the Dockerfile.

```dockerfile
FROM rust
WORKDIR /code
EXPOSE 3000
COPY . .
RUN curl https://github.com/diesel-rs/diesel/releases/download/v2...
RUN cargo build
CMD bash -c "diesel setup && cargo run -- run"
```

FROM rust tells Docker to use the official rust image. We’ll probably go back and use one of the slim variants, but for our initial run this will do.

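WORKDIR sets /code as the working directory for the instructions that follow (creating it if needed), so the COPY, RUN, and CMD lines all operate there.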

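EXPOSE 3000 documents that the container listens on port 3000, which we have configured as our API port. EXPOSE doesn’t publish anything by itself; the 3000:3000 ports mapping in compose.yaml is what makes the API reachable from the host. If the app served on a different port, we would change both.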
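EXPOSE and the ports mapping only get traffic as far as the container; the server inside still has to listen on 0.0.0.0 (all interfaces) rather than 127.0.0.1, or connections forwarded from the host never reach it. The app in this post does listen on 0.0.0.0:3000, per its log output. A minimal std-only sketch of picking that address, assuming a hypothetical PORT environment variable that falls back to 3000 (this is not code from the project):

```rust
use std::env;
use std::net::{Ipv4Addr, SocketAddr, SocketAddrV4};

// Port the API listens on. PORT is a hypothetical override; the default of
// 3000 matches EXPOSE 3000 and the 3000:3000 mapping in compose.yaml.
fn api_port() -> u16 {
    env::var("PORT")
        .ok()
        .and_then(|p| p.parse().ok())
        .unwrap_or(3000)
}

// 0.0.0.0 (Ipv4Addr::UNSPECIFIED) accepts connections on every interface,
// including the one Docker forwards published ports to. 127.0.0.1 would only
// accept connections that originate inside the container.
fn bind_addr(port: u16) -> SocketAddr {
    SocketAddr::V4(SocketAddrV4::new(Ipv4Addr::UNSPECIFIED, port))
}

fn main() {
    let addr = bind_addr(api_port());
    println!("would listen on {addr}");
}
```

With Axum 0.7 and Tokio, an address like this is typically what gets passed to tokio::net::TcpListener::bind before calling axum::serve.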

COPY . . copies everything in the project directory (the build context) into /code in the image. There’s a better way to do this, but for this example, pulling the code into the image is fine.

The first RUN line downloads and runs the diesel_cli installer script (the full command is visible in the build output further down). We use the CLI here to run our setup and migrations against the database. Diesel’s embed_migrations macro, from the diesel_migrations crate, could do this from inside the app instead, but for this example running the setup and migrations from the command line will work.

The second RUN line should look familiar: we’re building our code with cargo. In production, we would use a multi-stage build that compiles the executable in one stage and copies only the binary into a smaller runtime image, but for development this works just fine.

The last line, CMD, runs our app. First it runs diesel setup (again, embed_migrations is worth a look), then it starts the app with cargo run. Everything after -- is passed to our binary rather than to cargo, so -- run hands the app the run command I built into it. You might not need this if your app doesn’t take any command-line arguments.
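Because cargo run forwards everything after -- to the compiled binary, the container effectively launches the app with a single run argument. The post doesn’t show how the app reads it, so here is a minimal std-only sketch; the Command enum and the help fallback are hypothetical, and a larger app might use a crate like clap instead:

```rust
use std::env;
use std::process;

// Hypothetical subcommands; only `run` comes from the post.
#[derive(Debug, PartialEq)]
enum Command {
    Run,
    Help,
}

// Look at the first argument after the program name.
// No argument at all falls back to Help.
fn parse_command<I>(args: I) -> Result<Command, String>
where
    I: IntoIterator<Item = String>,
{
    let mut rest = args.into_iter().skip(1); // skip the program name
    match rest.next().as_deref() {
        Some("run") => Ok(Command::Run),
        Some("help") | None => Ok(Command::Help),
        Some(other) => Err(format!("unknown command: {other}")),
    }
}

fn main() {
    // In the container, CMD's `cargo run -- run` makes env::args() yield
    // [<binary path>, "run"].
    match parse_command(env::args()) {
        Ok(Command::Run) => println!("starting the API server..."),
        Ok(Command::Help) => println!("usage: <binary> run"),
        Err(e) => {
            eprintln!("{e}");
            process::exit(2);
        }
    }
}
```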

The compose.yaml

We’ll take this block by block. We won’t go line by line, as most of this is standard Docker Compose, but we’ll touch on a few points in each section.

```yaml
services:
  db:
    image: postgres:alpine
    restart: always
    environment:
      POSTGRES_PASSWORD: changeme # don't do this
      PGUSER: postgres
    healthcheck:
      test: ["CMD-SHELL", "pg_isready"]
      interval: 1s
      timeout: 5s
      retries: 10
    ports:
      - 5432:5432
    networks:
      - backend

  adminer:
    image: adminer
    restart: always
    ports:
      - 8080:8080
    networks:
      - backend
```

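We start by defining our database service, db, and our Postgres administration server, adminer. A couple of OK practices: both containers restart automatically if they stop (restart: always), and the database has a healthcheck that runs pg_isready every second and marks the container unhealthy after 10 consecutive failures. Setting PGUSER: postgres makes pg_isready connect as the postgres user. We also put both services on a bridge network, defined later, so our backend is isolated from any other containers.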

```yaml
  api:
    restart: always
    build: .
    # make sure our DB is up and healthy before starting the API
    depends_on:
      db:
        condition: service_healthy
    ports:
      - 3000:3000
    # need to play with this, let the app respawn when changed
    develop:
      watch:
        - action: sync
          path: .
          target: /code
    networks:
      - backend
```

api is the server defined in our Dockerfile. Again, it restarts automatically. The build key tells Compose to build from the Dockerfile in the current directory; if we had multiple Dockerfiles, we could name the one to use explicitly. depends_on, with condition: service_healthy, makes sure our database is up and reporting healthy before the API server starts. If our database service had a name other than db, we would use that name here instead. I’ve seen folks add shell scripts to ensure the database server is up, and I don’t understand why.
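depends_on with condition: service_healthy only applies while Compose is starting the stack. If the database restarts later, or the app runs outside Compose, nothing makes the API wait, which is probably why those wait scripts exist. The in-app alternative is to retry the connection. A minimal std-only sketch mirroring the healthcheck’s interval: 1s and retries: 10; the retry and wait_for_db helpers are hypothetical, and a plain TCP connect only proves the port is open, a weaker check than pg_isready:

```rust
use std::io;
use std::net::TcpStream;
use std::thread;
use std::time::Duration;

// Call `attempt` until it succeeds or `retries` attempts have failed,
// sleeping `interval` between attempts (like the healthcheck's
// interval/retries). Always makes at least one attempt and returns the
// last error if every attempt fails.
fn retry<T, E>(
    retries: u32,
    interval: Duration,
    mut attempt: impl FnMut() -> Result<T, E>,
) -> Result<T, E> {
    let mut tries = 0;
    loop {
        match attempt() {
            Ok(value) => return Ok(value),
            Err(err) => {
                tries += 1;
                if tries >= retries {
                    return Err(err);
                }
                thread::sleep(interval);
            }
        }
    }
}

// Hypothetical readiness check: can we open a TCP connection to Postgres?
// Inside the compose network the host is the service name, `db`.
fn wait_for_db(addr: &str) -> io::Result<()> {
    retry(10, Duration::from_secs(1), || TcpStream::connect(addr).map(|_| ()))
}

fn main() {
    match wait_for_db("db:5432") {
        Ok(()) => println!("database port is open"),
        Err(e) => eprintln!("database not reachable: {e}"),
    }
}
```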

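I’ve added the develop: watch section but haven’t used it yet, so for now you could remove it. It watches our local files for changes and syncs them into the running container, and it only takes effect when running docker compose watch. Since this is a compiled app, syncing source files alone won’t rebuild the server; action: sync+restart (which reruns the CMD, and therefore cargo run) or action: rebuild is probably closer to what we want.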

Lastly, we have the API server join the same network as our database and admin servers.

```yaml
# allow our backend apps to talk to each other
networks:
  backend:
    driver: bridge
```

This section should be self-explanatory. We set up a bridge network called backend that our backend servers join.
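On a user-defined bridge network like backend, Docker’s embedded DNS server resolves each service name (db, adminer, api) to that container’s address, which is what lets the API reach Postgres at the host name db. If that lookup ever seems to be the problem, a small std-only check run from inside the api container can confirm it; resolve_service is a hypothetical helper, and outside the compose network the name db simply won’t resolve:

```rust
use std::io;
use std::net::{SocketAddr, ToSocketAddrs};

// Resolve a compose service name (e.g. "db") plus port to socket addresses.
// Inside the compose network, Docker's embedded DNS answers this lookup;
// an IP literal is parsed directly without any DNS query.
fn resolve_service(service: &str, port: u16) -> io::Result<Vec<SocketAddr>> {
    Ok((service, port).to_socket_addrs()?.collect())
}

fn main() {
    match resolve_service("db", 5432) {
        Ok(addrs) => println!("db resolves to {addrs:?}"),
        Err(e) => eprintln!("could not resolve db: {e}"),
    }
}
```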

The Rust Change

On the Rust side, there is one minor change. The database connection URI in our .env file, which was

```text
DATABASE_URL=postgres://user:password@localhost/db_name
```

now uses the database service’s name as the host name. This is standard for Docker Compose: containers reach each other by the service names in compose.yaml. So if we renamed the database service from db to something else, like postgres_db, we would change the host name as well.

```text
DATABASE_URL=postgres://user:password@db/db_name
```

Let’s Run It!

With this setup, we run our Docker commands from the top-level project directory.

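```shell
$ docker compose up --force-recreate
```

I use the --force-recreate option so Compose recreates the containers even if their configuration and image haven’t changed, instead of reusing the old ones. It doesn’t force the api image to rebuild, though; adding --build does that. Here’s some of the truncated output.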

0 building with "default" instance using docker driver

#1 [api internal] load build definition from Dockerfile

#1 transferring dockerfile: 391B done

#1 DONE 0.0s

#2 [api internal] load metadata for docker.io/library/rust:latest

#2 DONE 0.0s

#3 [api internal] load .dockerignore

#3 transferring context: 2B done

#3 DONE 0.0s

#4 [api 1/5] FROM docker.io/library/rust:latest

#4 DONE 0.0s

#5 [api internal] load build context

#5 transferring context: 818.66kB 0.1s done

#5 DONE 0.2s

#6 [api 2/5] WORKDIR /code

#6 CACHED

#7 [api 3/5] COPY . .

#7 DONE 2.7s

#8 [api 4/5] RUN curl --proto '=https' --tlsv1.2 -LsSf https://github.com/diesel-rs/diesel/releases/download/v2.2.1/diesel_cli-installer.sh | sh

#8 0.580 downloading diesel_cli 2.2.1 x86_64-unknown-linux-gnu

#8 1.402 installing to /usr/local/cargo/bin

#8 1.404 diesel

#8 1.404 everything's installed!

...

...

...

#9 [api 5/5] RUN cargo build

#9 0.294 Updating crates.io index

...

...

...

#9 DONE 25.1s

#10 [api] exporting to image

#10 exporting layers

#10 exporting layers 6.4s done

#10 DONE 6.5s

Attaching to adminer-1, api-1, db-1

...

...

...

api-1 | Run this app.

api-1 | Starting RESTful API server

api-1 | -----------------------------------------------

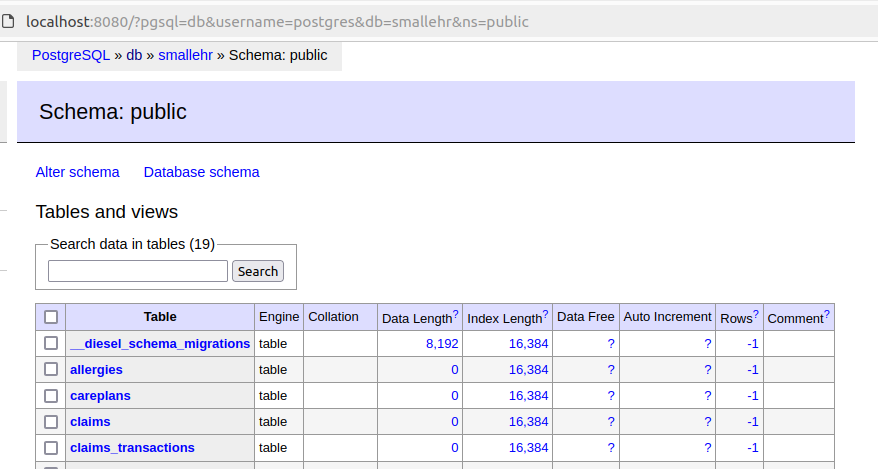

api-1 | Listening on Ok(0.0.0.0:3000)Here is the adminer container, as I connect from my local machine.

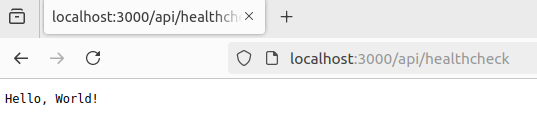

And here is a screenshot of the healthcheck API output from the Rust/Axum server.

Conclusion

There’s a bit of work to be done here, as noted along the way. I’m sure I’ll find more optimizations as I work through the project. For now, this setup works for me.

I hope this was helpful in understanding how to get a Docker setup running with an API server written in Rust, a PostgreSQL database, and an Adminer database admin server.